12 Customer Effort Score Questions to Use Right Away (with Examples)

TL;DR

- 12 effective Customer Effort Score (CES) questions based on best practices, delivered neutrally and timed correctly

- Three presentation types covered: numeric scale, text-based (Likert) scale, and emoticons

- Recommendations include neutral wording, audience segmentation, proper timing, and brevity

- Use CES to detect friction and improve UX across onboarding, support processes, and product usage

Looking for questions to use in your next Customer Effort Score survey? Not sure where to start?

Good news: in this guide you’ll find the best customer effort score question examples, learn how to structure a question, and get all the tips you need to create a successful CES survey.

Let’s start from the basics.

What is the Customer Effort Score?

Definition: Customer Effort Score (CES)

Customer Effort Score (CES): A one-question metric measuring how easy it was for a customer to complete a specific task, often rated on a numeric or emoticon scale.

Customer Effort Score (CES) is a feedback survey that measures how easy your customers find it to interact with your product or service.

It’s about the effort it takes for someone to use your product or interact with your customer service to find an answer to their question.

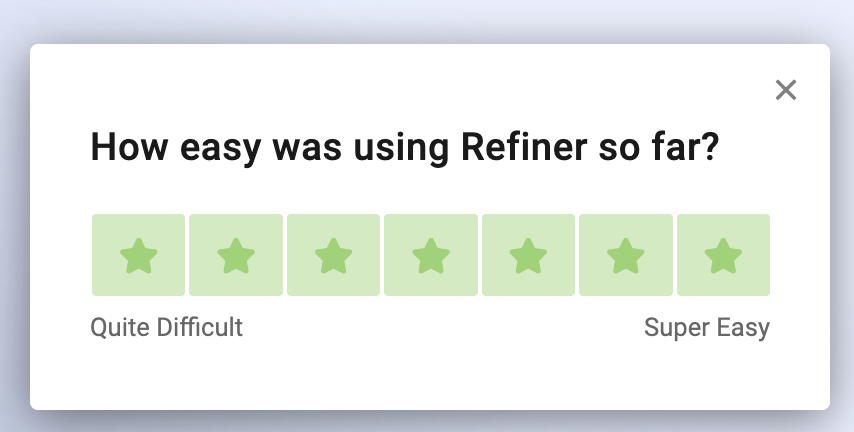

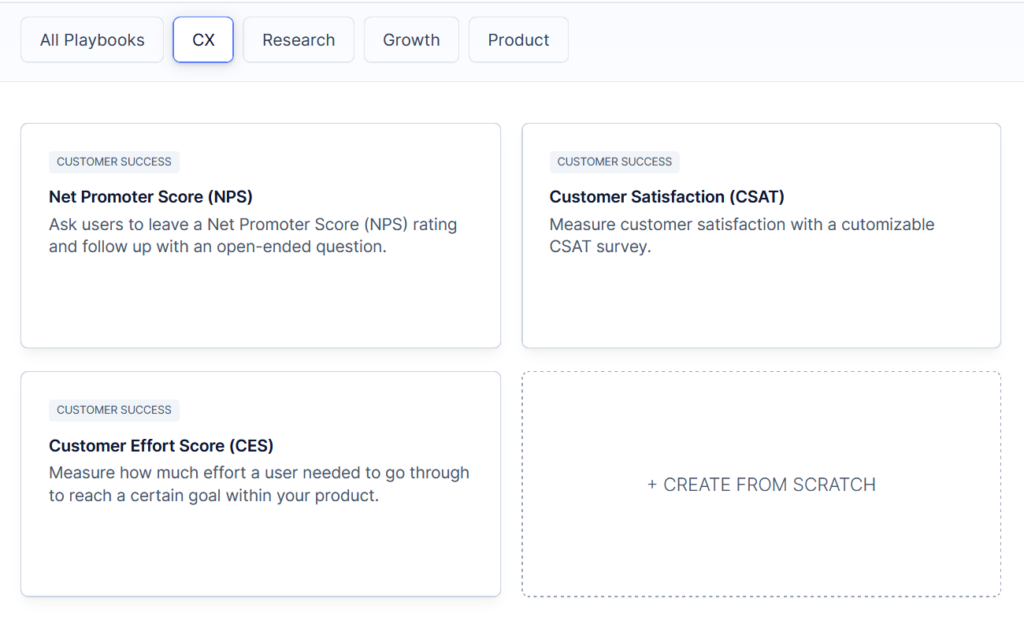

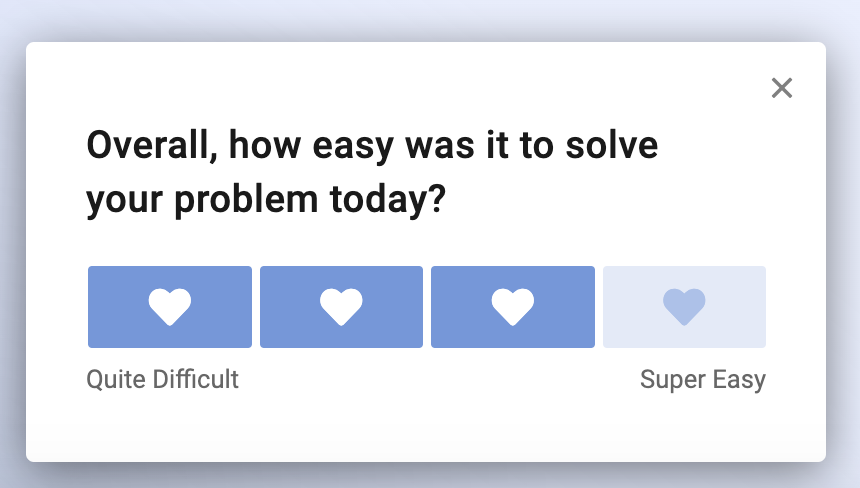

This is what a CES survey looks like.

How to Structure Customer Effort Score Questions

What makes a good CES survey question?

Here are four things to help you draft an engaging customer effort score question.

#1. Aim for simplicity

All customer feedback questions should aim for simplicity. With CES surveys specifically, you’re focusing only on customer effort.

The aspect of customer effort you want to measure will determine how you structure the question.

There’s no need to ask about customer satisfaction (CSAT) or explore ways to increase customer loyalty like in Net Promoter Score (NPS) surveys.

Once you know what you want to get out of the question, it’s time to work on your ask.

The simpler it is, the easier it gets to convince your customers to fill out the survey.

#2. Be clear with the wording

It’s easy to confuse your customers by asking too many things in one question.

Simple and straightforward questions tend to get a higher response rate as they simply feel easy to follow.

Here is an example of a direct and clear CES question.

How easy has it been to use Refiner so far?

The question is short and simple. As a customer, you know exactly what the company is asking, which makes it easier to respond.

#3. Avoid using the word ‘effort’

There’s no need to be too obvious with your ask. Yes, CES is all about measuring customer effort, but your questions shouldn’t steer customers toward the answer you want to get.

For example, instead of asking them:

On a scale of 1-7, how would you rate the effort it takes to use our X feature?

You can choose:

On a scale of 1-7, how easy was it to use the X feature?

The second question sounds more natural and is more likely to get users interested in answering it.

#4. Decide on the way you’ll structure the question

There are two ways to structure a CES question – you can either ask a direct question or turn it into a statement.

Here’s an example.

Let’s say you want to ask your customers to rate the customer interaction they’ve just had with your brand.

Here are two different ways to find out with the CES survey.

Option 1:

How easy was it to interact with our team?

Option 2:

To what extent do you agree with the statement: “[Product] made it easy to handle my issue.”

It’s up to you to pick the sentence structure that works best for you and your audience. You can even A/B test the two options to find the one that leads to higher engagement.
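If you do A/B test the two formats, comparing response rates is one way to read the results. Here is a minimal Python sketch: the `Variant` class and the counts are made up for illustration, and it uses a standard two-proportion z-test (normal approximation) to check whether the gap between the two response rates is larger than chance alone would explain.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    shown: int     # users who saw this version of the question
    answered: int  # users who submitted a response

    @property
    def rate(self) -> float:
        return self.answered / self.shown


def two_proportion_p_value(a: Variant, b: Variant) -> float:
    """Two-sided p-value for the difference in response rates (normal approximation)."""
    pooled = (a.answered + b.answered) / (a.shown + b.shown)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.shown + 1 / b.shown))
    if se == 0:
        return 1.0  # everyone (or no one) answered in both groups: no detectable difference
    z = (a.rate - b.rate) / se
    # Standard normal CDF computed via the error function.
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return 2 * (1 - phi)


# Hypothetical counts for the two question formats.
question = Variant("How easy was it to interact with our team?", shown=400, answered=96)
statement = Variant("[Product] made it easy to handle my issue", shown=400, answered=84)
p = two_proportion_p_value(question, statement)
print(f"{question.rate:.2f} vs {statement.rate:.2f}, p = {p:.2f}")
```

With these made-up numbers, the direct question gets a slightly higher response rate, but the p-value is well above 0.05, so you would want more responses before declaring a winner.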

Three Different Ways to Present CES Questions

Now let’s look at three different ways to present a Customer Effort Score survey.

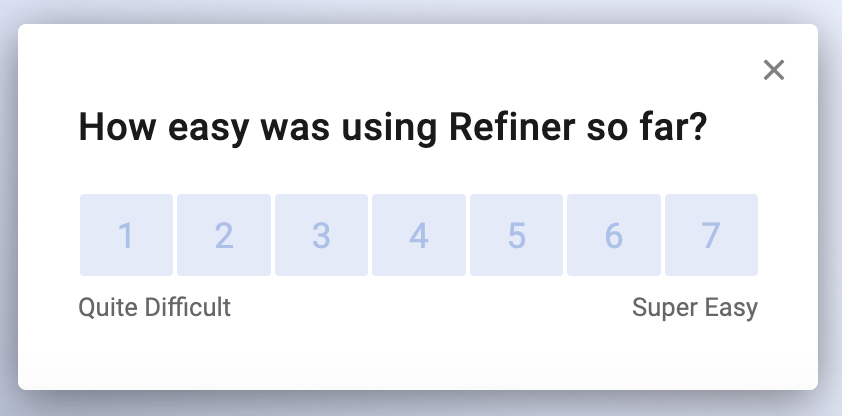

#1. Numerical scale

In this type of survey, you are measuring the customer effort with numbers – the higher the number, the higher the effort it takes for someone to complete an action.

For example, you can launch a survey on a 7-point scale to ask about the effort it takes to complete the onboarding experience.

A customer who picks 1 on the scale found the process very easy, while someone who picks 5 had a harder time completing the onboarding.

In most cases, the scale includes five, seven, or ten points, but it’s up to you to choose what works best for your business.
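To turn numeric responses into a score you can track over time, one common approach is to average them and watch the share of high-effort answers. The sketch below assumes the convention described above (1 = very easy, 7 = very difficult); the `ces_summary` function and the cut-off of 5 for “high effort” are illustrative choices, not a standard.

```python
from statistics import mean


def ces_summary(scores: list[int], scale_max: int = 7, high_effort_from: int = 5) -> dict:
    """Summarize CES answers where 1 = very easy and scale_max = very difficult."""
    if not scores:
        raise ValueError("no responses to summarize")
    if any(s < 1 or s > scale_max for s in scores):
        raise ValueError(f"scores must be between 1 and {scale_max}")
    # Count answers at or above the (assumed) high-effort threshold.
    high = sum(1 for s in scores if s >= high_effort_from)
    return {
        "average": round(mean(scores), 2),
        "high_effort_share": round(high / len(scores), 2),
    }


print(ces_summary([1, 2, 2, 3, 5, 6, 1]))  # {'average': 2.86, 'high_effort_share': 0.29}
```

Tracking the high-effort share alongside the average helps, because a handful of very frustrated customers can hide behind a comfortable-looking mean.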

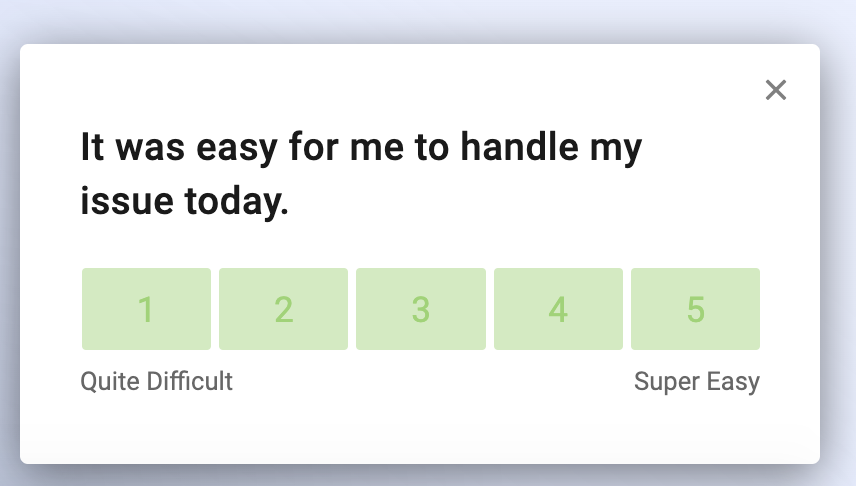

#2. Text-based scale

The text-based scale, also known as the Likert scale, presents a statement, and customers choose a response ranging from ‘strongly agree’ to ‘strongly disagree’ to reflect their opinion.

Let’s say you send this survey:

It was easy for me to handle my issue today.

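The responses range from ‘strongly agree’ to ‘strongly disagree’. By mapping each response to a number (for example, 1 for ‘strongly disagree’ up to 5 for ‘strongly agree’), you can calculate an average of the effort it takes for someone to interact with customer support.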
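Averaging Likert answers requires mapping each label to a number first. Here is a minimal sketch assuming a five-point scale; `LIKERT_5` and `likert_average` are hypothetical names. Watch the direction: for a statement like “It was easy for me to handle my issue today,” a higher average means less effort, which is the opposite of the numeric scale described earlier.

```python
# Assumed five-point mapping: higher numbers mean stronger agreement.
LIKERT_5 = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}


def likert_average(responses: list[str]) -> float:
    """Average Likert answers after normalizing case and surrounding whitespace."""
    if not responses:
        raise ValueError("no responses to average")
    values = [LIKERT_5[r.strip().lower()] for r in responses]
    return sum(values) / len(values)


answers = ["agree", "strongly agree", "neither agree nor disagree", "agree"]
print(likert_average(answers))  # 4.0
```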

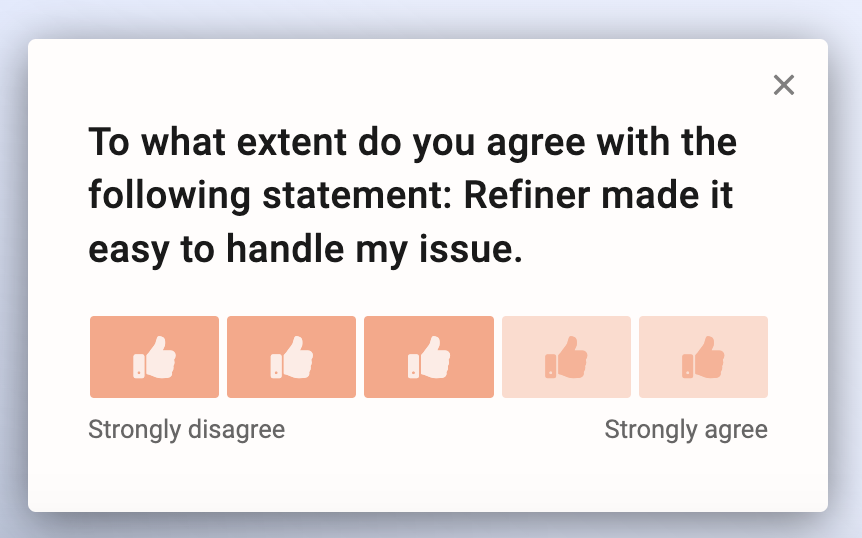

#3. Using emoticons

You can also launch a CES survey that associates the level of effort with different emoticons, such as smiley faces, hearts, or thumbs up.

There is still a numerical scale behind it, but the emoticons make the survey more visually appealing and probably easier to respond to.

PRO TIP: Refiner, a dedicated survey software, comes packed with templates that let you launch a CES survey in seconds.

12 Examples of Customer Effort Score Questions

Let’s look at real-life examples of customer effort score questions that you can use for your SaaS business.

Primary questions

These are primary questions you can ask as-is in a quick CES survey.

How easy has it been to use [Product] so far?

This is a popular question to use in a CES survey.

It’s simple and makes it easy to measure how much effort it takes your customers to use your product.

You can ask the question on a numerical scale or even use emojis to get users to pick the level of effort.

Overall, how easy was it to solve your problem today?

You can use this question to measure the customer effort when someone interacts with your customer success team.

Using the word ‘today’ makes the question more specific: you’re asking about one particular interaction rather than the overall customer experience, which makes it easier for your business to identify the touch points you need to improve.

To what extent do you agree with the following statement: [Product] made it easy to handle my issue.

This is a statement using the Likert scale to gather your customers’ views on the level of effort it takes to resolve an issue within your product.

Users pick a response ranging from ‘strongly agree’ to ‘strongly disagree’, and you can also number the scale so everyone understands the range of answers.

Was it easy to find the information you wanted on our website?

Here is a question you can ask to measure the ease of finding particular information on your website.

Let’s say someone was using your help desk to find an answer to a specific question. How easy was it to find what they were looking for?

The findings from this question can help you understand whether you need to improve anything in the information architecture to facilitate the user experience.

How easy was it to interact with our team?

This is another question to measure the level of effort it takes for someone to interact with your team.

Mentioning ‘our team’ makes the question feel more human, and you can add a follow-up question to learn more about their feedback or the particular person who assisted them.

Were the instructions you received during the onboarding stage easy to follow?

Interested in finding out how easy it is for someone to complete your onboarding journey? This is the right question for you.

The question is specific enough not to confuse your customers and the insights can help you review your onboarding process to ensure that more customers find it easy to complete.

How much do you agree with the following statement: [Product] made it easy for me to use the X feature.

You can launch a CES survey to gather feedback about a specific feature and how easy it is for someone to use it.

This statement gets users to pick from ‘strongly agree’ to ‘strongly disagree’ to rate the customer effort and you can even add a follow-up question to find out more about your customers’ thoughts on the particular feature.

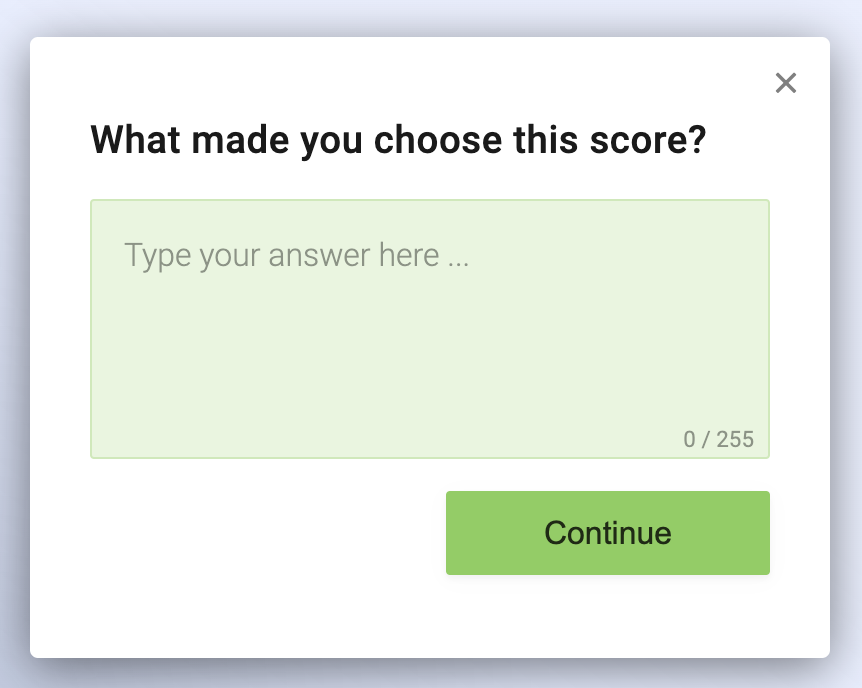

Follow-up questions

If you want to find out more details about your customers’ answers, you can add a follow-up question.

Here are some examples.

What do we need to do to improve your score by one point?

Let’s say you launch a CES question on a 7-point scale and a customer picks 4 as the level of effort it takes to use a particular feature.

You can add this follow-up question to find out more about their response and how you can improve it.

What made you choose this score?

Here is another way to ask for more details about the customer’s rating.

The follow-up questions help you find out more about the primary response without necessarily getting the users to spend too much time filling out the survey.

Would the Y feature make your experience easier instead?

Imagine your primary question asks how easy it is to use the X feature.

If their response indicates that the feature takes considerable effort to use, you can add a follow-up question asking whether a different feature would make their experience easier.

Segmentation is important in this case as this question is only relevant to your most engaged users who know enough about your product and its features.

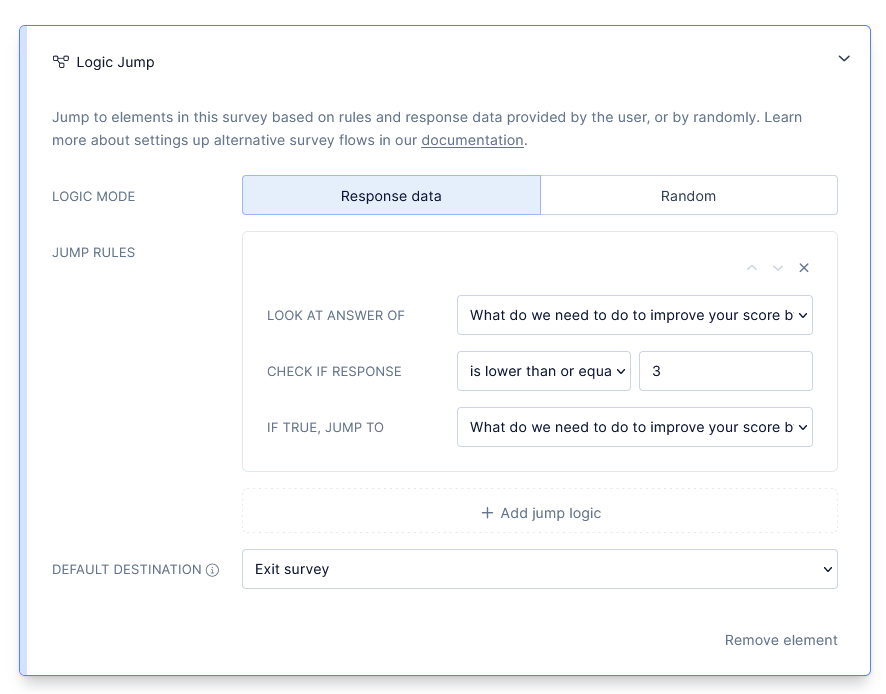

PRO TIP: Use survey logic to show different follow-up questions to different customers based on their answers.

For example, on a scale where higher scores mean an easier experience, I could ask people who scored three or less what we could do to improve their rating. In Refiner, I can do that by setting up survey logic.
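Independent of any particular tool, the same branching can be sketched in a few lines. This Python example is hypothetical (it is not Refiner’s API) and assumes a 1-7 scale where 7 means very easy, so a score of three or less triggers the improvement question and everyone else gets the general “why” question.

```python
LOW_SCORE_FOLLOW_UP = "What do we need to do to improve your score by one point?"
DEFAULT_FOLLOW_UP = "What made you choose this score?"


def follow_up_for(score: int, threshold: int = 3) -> str:
    """Pick a follow-up question for a 1-7 CES answer (7 = very easy, assumed)."""
    if not 1 <= score <= 7:
        raise ValueError("score must be between 1 and 7")
    # Low scores mean a difficult experience, so ask what would improve it.
    if score <= threshold:
        return LOW_SCORE_FOLLOW_UP
    return DEFAULT_FOLLOW_UP


for s in (2, 6):
    print(s, "->", follow_up_for(s))
```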

Open-ended questions

If you want to give more space to your users to share their thoughts about the customer effort, you can add an open-ended question to your CES survey.

Here are two examples.

Do you want to add anything else?

Right after the primary CES questions, you can ask your customers to share any other thoughts they have on how easy your product is to use.

This question allows them to expand as much as they want, which makes it a great opportunity for you to gather precious insights.

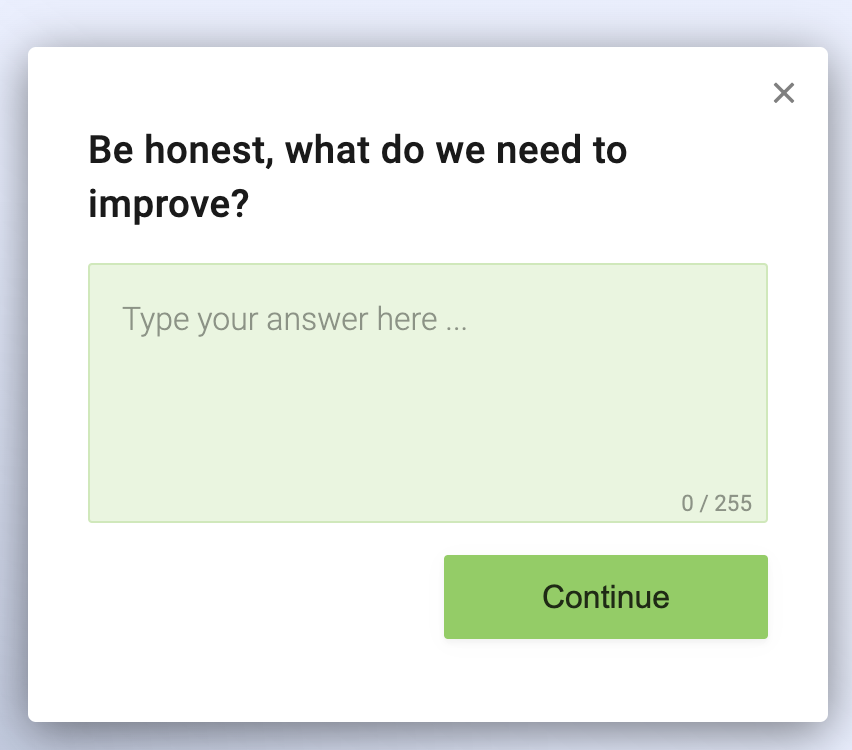

Be honest, what do we need to improve?

Another way to structure an open-ended question is to be direct.

By using “be honest” you are making it clear that you are open to all types of feedback in order to improve your product.

Best Practices When Creating a Customer Effort Score Question

Before you craft your next CES questions, here are four tips to help you create an effective CES survey.

#1. Be neutral

Your questions need to be objective to get the most out of customer feedback. Avoid questions that make it obvious what answer you’re hoping for.

Structure the question in a way that sounds neutral to increase the chances of getting your customers engaged.

Imagine asking a question like this one:

We want to confirm how easy you found the onboarding process. Is there even anything we need to improve?

You don’t want to ‘confirm’ the level of effort it takes for someone to onboard as this implies that you start with your own assumptions.

You can structure it in a more impartial way:

How easy did you find the onboarding process?

#2. Segment your audience

Not every customer is at a stage in their journey where your CES question is relevant.

Segment your audience to make your questions more relevant and increase your response rate.

For instance, you can trigger a CES survey for your most engaged users to ask them how easy a new feature is to use.

Chances are, they already use your product enough to be in a place to submit honest feedback about your latest feature.
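How you define “most engaged” is up to you. As an illustration, here is a sketch that assumes you track each user’s sign-up date and recent session count; the `User` fields and the thresholds (at least 10 sessions in the last 30 days and at least 30 days since sign-up) are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class User:
    id: str
    signed_up: date
    sessions_last_30_days: int


def engaged_users(users: list[User], today: date,
                  min_sessions: int = 10, min_tenure_days: int = 30) -> list[User]:
    """Keep users who are active now and have used the product long enough to judge a new feature."""
    cutoff = today - timedelta(days=min_tenure_days)
    return [u for u in users
            if u.sessions_last_30_days >= min_sessions and u.signed_up <= cutoff]


users = [
    User("a", date(2024, 1, 10), 25),  # active, long-time user
    User("b", date(2024, 5, 20), 30),  # active but signed up too recently
    User("c", date(2023, 11, 2), 3),   # long-time user, rarely active
]
print([u.id for u in engaged_users(users, today=date(2024, 6, 1))])  # ['a']
```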

#3. Timing matters

It’s important to choose the right time to send a CES survey so you get the most out of the feedback.

Let’s say you want to send a CES survey to measure the level of effort it takes for someone to sign up for your product.

The best time to launch the survey is right after they sign up for the product to keep the question relevant to the process they’ve just completed.
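One way to get the timing right is to trigger the survey from the product event itself rather than on a schedule. The sketch below is hypothetical: the event names, the `handle_event` function, and the `show_survey` callback stand in for whatever your analytics or survey tool provides, and “How easy was it to sign up?” is an illustrative question rather than one from the list above.

```python
from typing import Callable

# Hypothetical mapping from product events to the CES question shown right after them.
SURVEY_FOR_EVENT = {
    "signup_completed": "How easy was it to sign up?",
    "onboarding_completed": "Were the instructions you received during the onboarding stage easy to follow?",
    "ticket_resolved": "Overall, how easy was it to solve your problem today?",
}


def handle_event(event: str, user_id: str, show_survey: Callable[[str, str], None]) -> bool:
    """Show the matching CES question immediately after the event; return True if one was shown."""
    question = SURVEY_FOR_EVENT.get(event)
    if question is None:
        return False  # no CES survey tied to this event
    show_survey(user_id, question)
    return True


shown = []
handle_event("signup_completed", "user-42", lambda uid, q: shown.append((uid, q)))
print(shown)  # [('user-42', 'How easy was it to sign up?')]
```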

#4. Don’t add too many questions

Having too many questions in your survey can hurt your response rate, because customers are less willing to spend a lot of time filling out a survey.

Even one question can be enough to measure customer effort, provided you structure it in a way that makes the ask clear.

There you go…

Everything you need to know about structuring a Customer Effort Score question, plus plenty of example questions to help you create successful surveys.

Before you launch your next CES survey, make sure you have a final look at your questions.

Keep them simple and make the ask clear to increase your engagement rate.

Good luck!

Customer Effort Score Questions – FAQ

How many CES questions should I ask?

One per interaction is ideal, optionally followed by a single open-ended prompt for context.

When should I send a CES survey?

Right after a task is done, such as onboarding completion, support resolution, or checkout, to capture fresh insights.

Should I segment my audience?

Yes. Segmenting based on behavior or user type keeps the question relevant and boosts your response rate.

Does neutral wording matter?

Absolutely. Neutral phrasing avoids pushing users toward positive or negative responses, giving you cleaner data.

Which scale should I use?

Numeric scales (1–5 or 1–7) work well for structured feedback, while emoticons add a friendly touch but may reduce precision.