When to Prompt an In‑App Survey (9 Triggers That Work)

TL;DR

- Ask in the moment, after a meaningful action, not on login or mid task.

- Match survey type to intent. CSAT and CES right after interactions, NPS on a recurring cadence with engaged users.

- Use event‑based triggers, not page views. Add a short delay, cap frequency, and respect cooldown windows.

- Keep it short, one to three questions. Show the survey in a non‑blocking UI unless the moment is critical.

- Use audience filters. Only ask people who did the thing you are asking about.

- Steal the decision table and the copy templates below. Then map each recipe to Refiner triggers and throttles.

Oh, let’s face it: You do not get great feedback by throwing your survey at users whenever you please.

You get it by asking at the right moment, in the right context, to the right people.

That’s it.

But it’s so easy to miss it.

For example, early on, I thought it was the survey copy that makes or breaks the results. Then I watched two almost identical surveys behave completely differently, one landing a few seconds after success, the other popping up mid task.

And that is when it clicked for me: The difference was timing. It's all about when you prompt an in-app survey.

So, in this guide I will show you exactly when to prompt and trigger in‑app surveys, how to avoid interrupting flow, and how to set it all up in Refiner.

The one thing to remember:

If you can only remember one rule, make it this: trigger in‑app surveys right after a user completes a relevant task or hits a milestone. Add a small delay, five to fifteen seconds, so you do not interrupt the moment of completion. Set a global cooldown so the same person is not asked again for a while. Never prompt in the middle of a task. That is it.

When To Trigger In-app Surveys, A Practical In-app Timing Playbook

Most people start designing surveys with questions.

I don’t. I start with the moment.

Because, as the old adage goes: Ask too early and you get noise, ask too late and the memory fades.

By defining the moment first, I can quickly identify what to ask, when, which in-app survey type to use, etc., and always hit the bullseye.

And that’s what you’ll learn below.

This playbook gives you a simple way to decide when to ask, every time.

And it’s super simple to use:

- Start with the goal, such as onboarding, feature fit, loyalty, support, or churn.

- Map that goal to the right survey type. Anchor it to a real event, a checklist complete, export success, ticket closed, cancel started.

- Then add a small delay, set a cooldown, and target only people who lived that moment.

- Finally, add conflict rules so you never stack prompts. Do this and timing stops being a guess.

How to use this table

1) Find your goal. 2) Use the survey type listed. 3) Attach to the trigger event. 4) Use the delay and cooldown as a default. 5) Only target the audience that experienced the event. 6) Start with the sample copy and adjust.

Here it is.

| Goal | Survey Type | Trigger Event | Delay | Cooldown | Audience filter | Sample copy |
|---|---|---|---|---|---|---|
| Onboarding quality | CES or short CSAT | Onboarding checklist completed, or first key action done | 5–15 s | 14–30 days | New users who completed the checklist or key action | “Quick one, how easy was onboarding today?” |
| Feature satisfaction | CSAT, 1 question | First or 3rd use of a specific feature | 5–10 s | 30–90 days | Users who used the feature | “Did [Feature] help you get the job done?” |
| Bug or friction detection | CES | Repeated error, failed action, rage‑click pattern | 0–5 s after recovery | 14–30 days | Users who hit the error and continued | “That looked rough. How hard was this to complete?” |
| Loyalty tracking | NPS | After 2–3 active weeks and only for engaged users | None or 5 s | 90–180 days | Users with recent activity and multiple sessions | “How likely are you to recommend us to a friend or colleague?” |
| Support experience | CSAT | Helpdesk ticket or chat closed, first reply resolved, or doc viewed for 60+ seconds | 0–10 s | 30–90 days | People who engaged with support | “How did we do on your support request?” |
| Churn reasons | Exit survey | Entered cancellation flow, or plan downgraded | Inline in flow | N/A, single shot | Users in cancellation or downgrade | “What is the main reason you are leaving today?” |
| Inactivity risk | Pulse survey | 14–30 days since last key action or last login | Upon return, 5–10 s | 30–60 days | At‑risk users who come back | “Welcome back. Are you still trying to [job to be done]?” |
| Pricing friction | Short CSAT or 1 open | Hit paywall or pricing page 2+ times without converting | Exit of page, 5 s | 30–60 days | Evaluators, not paying customers | “Did pricing make sense for your use case?” |
| Mobile ratings | Store rating prompt | After a success moment, 3+ sessions, no crashes | End of session | 90–180 days | Mobile users meeting criteria | “Enjoying the app so far?” |
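If you want the table to live next to your code rather than in a doc, one option is to encode each row as data. Here is a minimal Python sketch of that idea, covering four of the rows. The `TimingRule` class, the goal keys, and the choice to encode "inline in flow" as a zero delay are my assumptions for illustration, not a Refiner format.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class TimingRule:
    survey_type: str
    trigger_event: str                         # named product event
    delay_seconds: Tuple[int, int]             # (min, max) default delay
    cooldown_days: Optional[Tuple[int, int]]   # None = single shot, no cooldown


# Four rows of the table, keyed by goal. The keys are illustrative labels.
RULES: Dict[str, TimingRule] = {
    "onboarding": TimingRule("CES", "Onboarding_Checklist_Completed", (5, 15), (14, 30)),
    "feature": TimingRule("CSAT", "Feature_X_Used", (5, 10), (30, 90)),
    "support": TimingRule("CSAT", "Support_Ticket_Closed", (0, 10), (30, 90)),
    # "Inline in flow" is encoded here as a zero delay (an assumption).
    "churn": TimingRule("Exit survey", "Plan_Cancel_Started", (0, 0), None),
}


def rule_for_event(event_name: str) -> Optional[TimingRule]:
    """Return the rule whose trigger matches the event, or None."""
    for rule in RULES.values():
        if rule.trigger_event == event_name:
            return rule
    return None
```

Keeping the defaults in one structure like this makes it easier to review delays and cooldowns side by side when you tune them later.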

But naturally, there are a few other factors that are always good to keep in mind when you’re planning your in-app survey.

Here’s everything you need to know about that.

What in-app survey triggers make timing work?

Most timing advice says “ask after X.” But that’s really just a direction, not a system.

The system lives in what we call trigger mechanics.

This is where response rates jump, and where you avoid annoying people.

Trigger mechanics cover event‑based triggers, small delays, re‑contact windows, audience filters, sampling, conflict rules, placement, time of day, and tone.

Get these wrong and a good survey flops. Get them right and you can run multiple surveys in parallel, quietly, without hurting product flow.

Below is how I set them up, with defaults you can copy. Start here, then tune with your own data.

Event‑based beats page‑based

A page view is a weak signal. An event is a strong one. Track explicit actions, create named events, and use those events to trigger the survey. Create events like Onboarding_Checklist_Completed, Feature_X_Used, Export_Success, Plan_Downgrade_Started, Support_Ticket_Closed. Attach the survey to the event, not to an arbitrary page URL.
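To make the difference concrete, here is a small Python sketch of surveys subscribing to named events. The `EventBus` class and the `export_csat` survey id are hypothetical names I made up for the example. In practice your survey tool or analytics layer plays this role.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Handler = Callable[[str], None]


class EventBus:
    """Tiny in-memory dispatcher: surveys subscribe to named events."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def on(self, event_name: str, handler: Handler) -> None:
        self._handlers[event_name].append(handler)

    def track(self, event_name: str, user_id: str) -> int:
        """Record an event and run its handlers; returns how many ran."""
        handlers = self._handlers.get(event_name, [])
        for handler in handlers:
            handler(user_id)
        return len(handlers)


prompts: List[Tuple[str, str]] = []  # (survey_id, user_id) pairs that would be shown
bus = EventBus()
bus.on("Export_Success", lambda user_id: prompts.append(("export_csat", user_id)))

bus.track("Page_Viewed", "user_1")      # weak signal: nothing is attached
bus.track("Export_Success", "user_1")   # strong signal: survey is queued
```

The page view goes nowhere because no survey listens for it. Only the explicit success event produces a prompt.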

Add a small delay

Completion is the high point. Interrupt immediately and you steal the moment. Delay five to fifteen seconds. Let the brain breathe. Give them a beat to feel the success, then ask about it. If you are prompting after an error recovery, go shorter. If the event happens at logout or session end, show at exit.
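As a rough sketch, the delay rule can be expressed as a lookup from context to seconds. The context labels and the ten second default are my own picks inside the ranges above, so treat them as placeholders.

```python
from datetime import datetime, timedelta

# Defaults taken from the guidance above; the context labels are illustrative.
DELAY_BY_CONTEXT = {
    "completion": 10,      # inside the five to fifteen second range
    "error_recovery": 3,   # go shorter after a recovered error
    "session_end": 0,      # show at exit, no extra wait
}


def show_at(event_time: datetime, context: str) -> datetime:
    """Return the earliest moment the survey should appear."""
    seconds = DELAY_BY_CONTEXT.get(context, DELAY_BY_CONTEXT["completion"])
    return event_time + timedelta(seconds=seconds)
```

Unknown contexts fall back to the completion default, which is the safer side to err on.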

Cooldowns and frequency caps

Two layers work best. Set a global cooldown, for example, no more than one survey per person every 14 days. Then, add per‑type caps, for example, NPS no more often than every 90 to 180 days. You can also set per‑survey caps, for example, a user should not see the CSAT for Feature X more than once per 30 days. This keeps your sample clean and avoids survey fatigue.

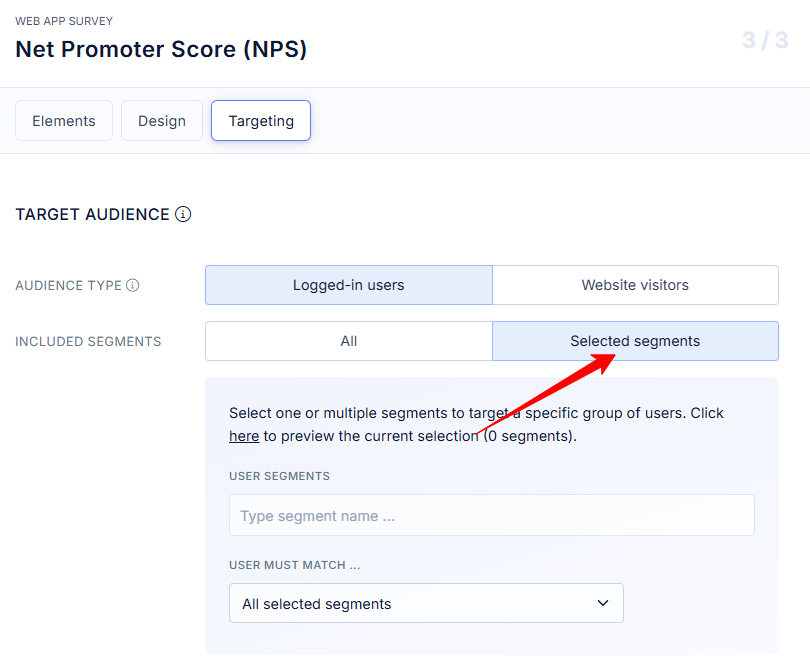
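Here is how the layered check could look in Python, as a sketch only. The function name, the dictionaries, and the `feature_x_csat` id are assumptions for illustration. The durations mirror the examples above.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

GLOBAL_COOLDOWN = timedelta(days=14)                      # any survey
TYPE_COOLDOWN = {"NPS": timedelta(days=90)}               # per survey type
SURVEY_COOLDOWN = {"feature_x_csat": timedelta(days=30)}  # per survey


def _elapsed(now: datetime, last: Optional[datetime], minimum: timedelta) -> bool:
    """True when the user was never asked, or was asked long enough ago."""
    return last is None or now - last >= minimum


def can_show(
    now: datetime,
    survey_id: str,
    survey_type: str,
    last_any: Optional[datetime],
    last_by_type: Dict[str, datetime],
    last_by_survey: Dict[str, datetime],
) -> bool:
    """Apply the global cooldown first, then the per-type and per-survey caps."""
    if not _elapsed(now, last_any, GLOBAL_COOLDOWN):
        return False
    type_min = TYPE_COOLDOWN.get(survey_type)
    if type_min is not None and not _elapsed(now, last_by_type.get(survey_type), type_min):
        return False
    survey_min = SURVEY_COOLDOWN.get(survey_id)
    if survey_min is not None and not _elapsed(now, last_by_survey.get(survey_id), survey_min):
        return False
    return True
```

A survey must pass every layer that applies to it. The global cooldown runs first because it is the cheapest check and blocks the most prompts.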

Audience filters and segmentation

Only show a survey to people who did the thing. Use attributes and segments. New users only. Customers on specific plans. Users who touched Feature X two or more times. People who have not responded to NPS yet. The narrower the audience, the more useful the data.

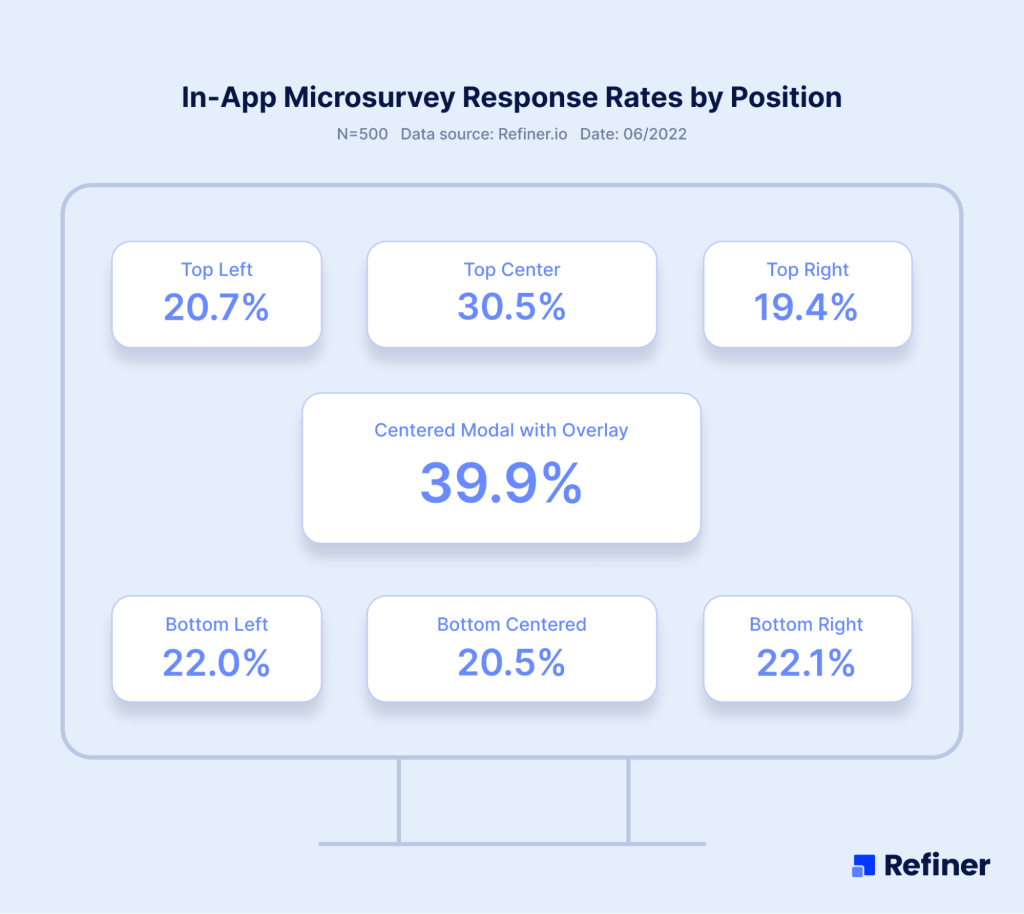
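An audience filter is just a predicate over what you know about the user. Below is a hedged Python sketch. The `User` fields, the plan names, and the `feature_x_csat` id are hypothetical, so swap in your own attributes and segments.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

ELIGIBLE_PLANS = {"Pro", "Enterprise"}  # example plans, adjust to your own


@dataclass
class User:
    plan: str
    event_counts: Dict[str, int] = field(default_factory=dict)
    answered_surveys: Set[str] = field(default_factory=set)


def eligible_for_feature_csat(user: User, min_uses: int = 2) -> bool:
    """Only users on the right plan who used the feature and have not answered yet."""
    used_enough = user.event_counts.get("Feature_X_Used", 0) >= min_uses
    on_plan = user.plan in ELIGIBLE_PLANS
    not_answered = "feature_x_csat" not in user.answered_surveys
    return used_enough and on_plan and not_answered
```

Each condition narrows the audience a little more, which is the point: fewer responses, but from people who actually lived the moment.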

Placement and UI

Modals feel heavy. Slide‑ins and bottom sheets are lighter. I use modals only when the outcome is critical, for example, exit reasons in a cancellation flow. For CSAT and CES, a small slide‑in or an embedded widget works well. Keep the copy tight. Use clear primary and secondary actions.

Length (short always wins!)

Don’t go beyond one to three questions. If you need more, move the rest to a follow‑up channel, like email, or use branching logic. The goal in‑app is speed and context, not depth.

Sampling strategies

You do not need to ask everyone. Randomly sample a percentage of eligible users. Sample by segment, for example, new users at a higher rate than power users. Rotate among multiple CSAT targets so you do not stack surveys in a single session.
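One common way to sample without storing extra state is to hash the user id into a bucket between 0 and 1 and compare it with a per-segment rate. The sketch below assumes that approach. The segment names and rates are made-up examples, not recommendations.

```python
import hashlib
from typing import Dict

# Hypothetical rates: new users are sampled more heavily than power users.
SAMPLE_RATES: Dict[str, float] = {"new": 0.5, "power": 0.1}


def in_sample(
    user_id: str,
    survey_id: str,
    segment: str,
    rates: Dict[str, float] = SAMPLE_RATES,
) -> bool:
    """Deterministically decide whether this user is sampled for this survey."""
    rate = rates.get(segment, 0.0)  # unknown segments are never sampled
    digest = hashlib.sha256(f"{survey_id}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < rate
```

Because the survey id is part of the hash, the same user lands in different buckets for different surveys, so one unlucky person is not picked for everything. The decision is also stable across sessions, which keeps the sample consistent.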

Avoid conflicts

You will have multiple surveys at once. Add rules that prevent stacked interruptions. For example, never show a second survey within the same session. Never show two surveys of the same type in a week. Always prioritize cancellation and support CSAT over generic prompts.
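A conflict rule can be as simple as a priority lookup plus a one-per-session guard. This is a minimal sketch with survey ids I invented for the example.

```python
from typing import Dict, List, Optional

# Lower number wins. Cancellation and support CSAT outrank generic prompts.
PRIORITY: Dict[str, int] = {"cancellation_exit": 0, "support_csat": 1}
DEFAULT_PRIORITY = 10


def pick_survey(candidates: List[str], shown_this_session: bool) -> Optional[str]:
    """Return at most one survey for this session, honoring priorities."""
    if shown_this_session or not candidates:
        return None  # never stack a second prompt in the same session
    return min(candidates, key=lambda survey_id: PRIORITY.get(survey_id, DEFAULT_PRIORITY))
```

When two candidates share the same priority, the one listed first wins, so you can order the list by whichever survey matters more that week.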

Time of day, session context, and device

In B2B products, weekday daytime often performs best. If your analytics show that users complete key work at specific hours, queue the survey to appear during those windows. For mobile, prefer end of session. Respect smaller screens and touch targets.
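If you want to gate prompts by local working hours, a check like the one below is a reasonable starting point. It is a sketch under simple assumptions: a fixed UTC offset per user and a 9 to 17 window, both placeholders. A real setup would more likely store an IANA time zone per user and take the window from your own analytics.

```python
from datetime import datetime, timedelta, timezone


def in_work_window(
    now_utc: datetime,
    utc_offset_hours: float,
    start_hour: int = 9,
    end_hour: int = 17,
) -> bool:
    """True on weekdays between start_hour and end_hour in the user's local time.

    now_utc must be timezone-aware.
    """
    local = now_utc.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    is_weekday = local.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start_hour <= local.hour < end_hour
```

If the check fails, hold the survey and evaluate it again on the next qualifying event rather than forcing it through.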

Language and tone

Be human. Acknowledge the moment. Avoid jargon. Avoid apology unless an error occurred. Say thank you. Make the survey feel like part of the product, not an interruption.

What in-app timing mistakes should I avoid?

These mistakes destroy response rates and trust.

I learned that the hard way.

- Login prompts feel efficient. They ask people to rate an experience they have not had, so you get polite guesses.

- Mid‑task pop‑ups feel timely. They rip attention away from the job, and frustration skews every answer.

- Early NPS feels proactive. It measures brand curiosity, not loyalty.

- Long forms feel thorough. They turn a two‑second moment into a chore.

- Repeating prompts feels like persistence. It erodes goodwill fast.

- Asking about a feature to people who never touched it feels like broad research. It fills your spreadsheet with noise.

- Stacking two surveys in one session feels like speed. It trains users to close everything on sight.

- Incentives feel helpful. They can push ratings up in a way your team cannot reproduce later.

I have made each of these calls at least once.

You do not have to.

- Prompting on first login. Think about it, your users have not done anything yet. There is nothing to rate. Wait for a milestone.

- Interrupting mid task. You stall momentum and you bias responses toward frustration. Prompt after completion or at exit.

- NPS too early. Asking for likelihood to recommend before real usage leads to vanity scores and bad decisions.

- Long forms in‑app. Save the long research survey for email. Keep in‑app short, focused, and contextual.

- Hitting the same user repeatedly. Nothing kills trust faster. Use global cooldowns and per‑type caps.

- Generic audiences. Asking about a feature to users who never used it will produce noise. Filter by event.

- Stacked prompts. Two surveys in one session is a quick path to churn. Use conflict rules.

- Bribing the moment. Incentives can bias ratings. If you use them, disclose them clearly and avoid tying them to positive responses.

What copy should I use for each moment? (9 killer in-app survey copy templates)

Here are the exact words I reach for in real products.

These templates cover the key moments, onboarding, feature use, error recovery, support, NPS, churn, and more.

Use them as a starting point. Replace the placeholders with your events, features, and product language.

Keep it conversational and fast. One to three questions. Ask only people who just had the moment.

Post‑onboarding CES

- Title: Quick one about onboarding

- Question: How easy was it to get started today?

- Scale: Very difficult, Difficult, Neutral, Easy, Very easy

- Follow‑up (conditional on Difficult or Very difficult): What made it hard? One sentence is perfect.

- Thank you: Thanks for the insight. We use every response to improve onboarding.

Feature CSAT

- Title: Feedback on [Feature]

- Question: Did [Feature] help you get the job done?

- Scale: Not at all, A little, Mostly, Completely

- Follow‑up (conditional on Not at all or A little): What was missing?

- Thank you: Thanks, this helps us prioritize improvements.

Error recovery CES

- Title: That did not look fun

- Question: After that hiccup, how easy was it to complete your task?

- Scale: Very difficult to Very easy

- Follow‑up: What should we fix first?

- Thank you: We will dig in. Appreciate the nudge.

NPS

- Title: A quick question

- Question: How likely are you to recommend us to a friend or colleague?

- Scale: 0 to 10 numeric scale

- Follow‑up: What is the main reason for your score?

- Thank you: Thanks for helping us build a better product.

Support CSAT

- Title: How did we do?

- Question: How satisfied are you with the help you received?

- Scale: Very dissatisfied to Very satisfied

- Follow‑up: Anything we should have done differently?

- Thank you: Thanks for the feedback. We review every response.

Cancellation exit survey

- Title: Before you go

- Question: What is the main reason you are leaving today?

- Choices: Too expensive, Missing features, Switching to a competitor, Not using it enough, Other

- Follow‑up (Other or Missing features): What feature or change would have kept you?

- Thank you: Thank you for the honesty. It really helps.

Inactivity pulse

- Title: Still working on [job to be done]?

- Question: Are you still trying to [primary outcome] with [product name]?

- Choices: Yes, weekly. Yes, sometimes. Not at the moment.

- Follow‑up: What would make [product name] useful again?

- Thank you: Thanks for the signal. We are always listening.

Pricing friction check

- Title: Did pricing make sense?

- Question: Did our pricing make sense for your use case?

- Scale: Not at all to Completely

- Follow‑up: What did not fit?

- Thank you: Thanks, this helps us improve the plan structure.

Mobile rating prompt

- Title: Enjoying the app?

- Question: Would you rate us in the store?

- Actions: Rate now, Not now

- Thank you: Thanks for supporting the team.

7 in-app survey timing recipes

Theory is useful. But shipping relies on recipes.

These are the exact setups I use in real products.

Each one tells you: the trigger event, who sees it, the delay, the cooldown, the survey, and the copy.

Pick the one that matches your goal, wire it to your events, and ship. Start with the defaults, then adjust based on your data.

If you only need a quick win, try #1 for onboarding or #5 for NPS. If you need to catch friction, start with #4. If churn is the question, jump to #7.

Let’s get specific.

1) Onboarding completion check

- Trigger: Onboarding_Checklist_Completed

- Who sees it: New users who completed at least four tasks in the checklist within their first 7 days

- Delay: 10 seconds after completion

- Cooldown: 30 days

- Survey: CES, one question with open follow‑up for difficult ratings

- Copy: “Quick one, how easy was onboarding today?”

- Why this works: Momentum is high. Users can still recall the friction points. You capture the first impression with minimal bias.

2) Feature X satisfaction

- Trigger: Feature_X_Used 3 times within 48 hours

- Who sees it: Active users on Plans Pro and Enterprise, excluded if they answered this survey in the last 60 days

- Delay: 5 seconds after the 3rd use

- Cooldown: 60 days

- Survey: 1‑question CSAT with open follow‑up on low ratings

- Copy: “Did [Feature] help you get the job done?”

- Why this works: Three uses separate dabblers from real users. You get feedback from people who actually relied on the feature.
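The "3 times within 48 hours" condition is a sliding window check. Here is one way it could be sketched in Python, assuming you can read the timestamps of a user's Feature_X_Used events. The function name is hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def hit_usage_threshold(
    use_times: List[datetime],
    count: int = 3,
    window: timedelta = timedelta(hours=48),
) -> bool:
    """True when the latest `count` uses all fall within `window`."""
    if len(use_times) < count:
        return False
    recent = sorted(use_times)[-count:]
    return recent[-1] - recent[0] <= window
```

Run the check each time the event fires. It only looks at the most recent uses, so an old burst of activity from months ago does not trigger the survey today.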

3) Export success moment

- Trigger: Export_Success event fired

- Who sees it: Users who successfully exported a report to CSV or PDF for the first time

- Delay: 8 seconds

- Cooldown: 90 days

- Survey: CSAT, short

- Copy: “How satisfied are you with the export experience?”

- Why this works: Exports finish a mini journey. The memory is fresh, the feeling is clear.

4) Error recovery CES

- Trigger: Error_Shown followed by Task_Completed in the same session

- Who sees it: Users who hit the error state and still completed the task

- Delay: 3 seconds after completion

- Cooldown: 30 days

- Survey: CES

- Copy: “After that hiccup, how easy was it to complete your task?”

- Why this works: You acknowledge the bump and focus on effort. Great for prioritizing fixes.
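The trigger here is a sequence, not a single event. A minimal Python sketch of the check, assuming you keep an ordered list of event names per session (the `Retry_Clicked` event in the usage lines is made up):

```python
from typing import List


def recovered_from_error(session_events: List[str]) -> bool:
    """True when Task_Completed occurs after Error_Shown in the same session."""
    try:
        first_error = session_events.index("Error_Shown")
    except ValueError:
        return False  # no error in this session, so nothing to ask about
    return "Task_Completed" in session_events[first_error + 1:]


# Usage: evaluate when Task_Completed fires.
recovered_from_error(["Error_Shown", "Retry_Clicked", "Task_Completed"])  # qualifies
recovered_from_error(["Task_Completed", "Error_Shown"])                   # does not
```

Order matters: a completion that happened before the error says nothing about how hard the recovery was.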

5) Quarterly NPS for engaged users

- Trigger: Weekly_Active_User for 2 consecutive weeks and 5+ sessions in the last 30 days

- Who sees it: Customers with activity and billing in good standing, exclude anyone who saw NPS in the last 90 days

- Delay: End of session

- Cooldown: 90–180 days

- Survey: NPS with open follow‑up

- Copy: “How likely are you to recommend us to a friend or colleague?”

- Why this works: You ask only people who know the product. Scores reflect reality, not first impressions.

6) Support CSAT on ticket close

- Trigger: Support_Ticket_Closed with resolution code Success

- Who sees it: Anyone who interacted with support in the last 24 hours

- Delay: Immediately after the chat window closes or within a few seconds of page return

- Cooldown: 60–90 days

- Survey: CSAT

- Copy: “How did we do on your support request?”

- Why this works: The experience is fresh. You capture both speed and quality.

7) Cancellation reason capture

- Trigger: Plan_Cancel_Started

- Who sees it: Users who enter the cancellation flow

- Delay: Inline, inside the flow

- Cooldown: N/A

- Survey: One required choice with optional open follow‑up

- Copy: “What is the main reason you are leaving today?”

- Why this works: You get real reasons, at the moment of truth, without extra emails.

How does in-app survey timing differ on mobile vs web?

Mobile and web follow the same rules. The way you apply them changes.

On mobile, sessions are shorter and interrupted. I ask at success moments or the end of a session, never on first launch.

On web, focus windows are longer. I use small slide‑ins with a short delay so I do not get in the way.

Here is how I adjust timing and UI between the two.

- Session shape. Mobile sessions are shorter. Prompt at success moments or end of session. Avoid first launch. Avoid mid task.

- Real estate. Use bottom sheets or small banners. Keep text tight, friendly, readable.

- System prompts. For store ratings, only show after clear success. Never after a crash. Respect the platform rules. Space prompts well.

- Offline and flaky connections. Queue the survey until the app is online. If you miss the moment, show at next open with a short preface like “Quick one from last time.”

- Distribution of devices. Older devices and small screens need larger tap targets and fewer steps.
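The offline rule above can be sketched as a small queue: show right away when online, otherwise hold the survey and release it at the next open with the short preface. The `SurveyQueue` class is a hypothetical illustration of the logic, not an SDK feature. On a real device this would live in your mobile client code.

```python
from collections import deque
from typing import Deque, Optional, Tuple

PREFACE = "Quick one from last time."


class SurveyQueue:
    """Holds surveys that could not be shown because the app was offline."""

    def __init__(self) -> None:
        self._pending: Deque[str] = deque()

    def request(self, survey_id: str, online: bool) -> Optional[str]:
        """Show now when online; otherwise hold the survey for later."""
        if online:
            return survey_id
        self._pending.append(survey_id)
        return None

    def on_app_open(self, online: bool) -> Optional[Tuple[str, str]]:
        """At next open, release one held survey with a short preface."""
        if online and self._pending:
            return self._pending.popleft(), PREFACE
        return None
```

Releasing only one held survey per open keeps the no-stacking rule intact even after a long offline stretch.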

And that’s it…

Great in‑app survey timing is not magic. It is event selection, delay, and discipline. Ask right after the moment. Keep it short. Cap frequency. Target the audience that lived the experience. Then wire it with clear triggers and guardrails so it runs quietly in the background while your team ships product.

Steal the table, ship one or two recipes this week, and iterate. If you are using Refiner, you have everything you need to do this today.

Want to learn more about in-app surveys?

I’ve been sharing my experience with in-app surveys here for quite some time now, and here are my best guides that will help you take your in-app surveys to a whole new level:

- In‑app survey examples: https://refiner.io/blog/in-app-survey-examples/

- In‑app feedback best practices: https://refiner.io/blog/in-app-feedback-best-practices/

- Best time to send NPS: https://refiner.io/blog/best-time-to-send-nps-survey/

- In‑app CSAT: https://refiner.io/blog/in-app-csat/

- In‑app CES: https://refiner.io/blog/in-app-ces/

- Trigger Events docs: https://refiner.io/docs/kb/in-product-surveys/trigger-events/

- Recurring Surveys: https://refiner.io/docs/kb/in-product-surveys/recurring-surveys/

- Schedule and Throttle: https://refiner.io/docs/kb/in-product-surveys/schedule-and-throttle/

- Restrict Locations: https://refiner.io/docs/kb/in-product-surveys/show-survey-at-specific-locations/

In-app survey timing – FAQ

After someone has real experience, not day one. My default is two to three active weeks first, then every 90 to 180 days. Ask at end of session so you do not interrupt work.

In B2B, working hours perform well. Start with weekday daytime in the user’s time zone, then check your analytics and adjust.

Short. One to three questions. In‑app is about context and speed. If you need depth, add a single open follow‑up or send a longer email survey to a subset.

No. Set global cooldowns and per‑type caps. Protect your users from fatigue and protect your data from bias.

Five to fifteen seconds covers most cases. Shorten to three seconds after an error recovery. For end of session events, show at exit without delay.

Do not prompt mid task. Use small UI, slide‑ins over modals, and short copy. Keep the controls friendly and easy to dismiss.

Yes. Use audience filters and segments. Ask admins about admin tasks. Ask editors about editor flows. Ask free users different questions than enterprise accounts.

Set priority rules. Support CSAT and cancellation exit prompts win over generic questions. Prevent more than one survey per session. Space out schedules.

Well, this is tricky. Incentives can boost completion, but they can also bias results. If you use rewards, disclose them and avoid tying them to positive ratings.

Check the moment first. Are you asking at the right time, to the right people? Then shorten the survey and simplify the UI. You can bump sampling temporarily, but fix timing before you crank volume.