7 In-app Survey Examples to Follow

TL;DR: In-app survey examples

- This guide includes 7 real-world in-app survey examples, each with specific triggers, goals, and ready-to-use questions.

- You’ll learn when to launch surveys like NPS, onboarding feedback, feature satisfaction, CSAT, CES, and more.

- Each example includes practical context, ideal timing, and why it works — so you can adapt it to your own product immediately.

Most articles about in-app surveys focus on one thing: the questions.

Well, that’s not what this one is about.

You see, I want to show you how in-app surveys actually get used inside products:

- Where to trigger them.

- What kind of feedback to collect and when.

- And how to do it without annoying users (this one is big!).

So, this isn’t about theory or listing questions you could ask. It’s all about showing you in-app survey examples you can use.

Let’s dive in.

What Makes a Great In-App Survey

Definition: What is an in-app survey?

An in-app survey is a short feedback prompt shown inside your product, usually after a user takes a specific action. It helps capture insights while the experience is still fresh.

Before we jump into examples, let’s get clear on what sets great in-app surveys apart from the noise. Based on years of experience, here’s how I break it down:

1. Clear objectives

I always start with one question: why do I need this survey? What do I want to learn? Am I curious about onboarding friction, feature satisfaction, churn triggers, or engagement? Starting with a clear objective lets me design a survey that actually provides answers, not just a handful of random data points.

2. Timing

I know it may sound like a cliché, but it’s true: a survey is only as good as its timing.

Drop it too early, and you’ll show it to users who haven’t formed an opinion yet. Trigger it too late, and the context is gone. I aim for meaningful moments, like when someone finishes onboarding, uses a new feature for the first time, or cancels (that last one hurts, though).

That’s when their input is most accurate and actionable, and it’s also when they’re most likely to share it. After all, you’re asking about something tied directly to their most recent action.
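One way to picture “meaningful moments” in code is a small lookup from product events to the survey each one should trigger. This is a minimal Python sketch, not any tool’s real API: the event names and survey IDs are hypothetical placeholders for whatever your analytics or survey setup actually emits.

```python
from typing import Optional

# Hypothetical event names mapped to hypothetical survey IDs.
# Each key is a "meaningful moment" worth asking about.
SURVEY_FOR_EVENT = {
    "onboarding_completed": "onboarding_feedback",
    "feature_first_used": "feature_feedback",
    "subscription_cancelled": "exit_survey",
}


def survey_to_show(event_name: str) -> Optional[str]:
    """Return the survey to show right after this event, or None.

    Only the moments listed above trigger a survey; routine events
    such as page views never do.
    """
    return SURVEY_FOR_EVENT.get(event_name)


print(survey_to_show("onboarding_completed"))  # onboarding_feedback
print(survey_to_show("page_viewed"))           # None
```

Keeping the mapping explicit makes it easy to review which moments can interrupt a user, and everything not on the list stays survey-free.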

3. Difficulty (or actually, lack of it)

No one wants to fill out a wall of text. The easier a survey is to answer, the more likely people are to respond. That means keeping it short (two questions at most) and using quick tap- or click-to-answer formats. At Refiner we refer to this as “easy and effortless”.

4. UX

Surveys should feel like part of the app, not a jarring interstitial. That means matching your UI design, tone, and placement. If it looks awkward or branded differently, users bounce. Design consistency matters.

5. Targeting

I don’t blast the whole user base with every survey. Instead, I segment: new users, power users, churn candidates, etc. Better context equals better data.
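Segmentation can start as a simple rule set. The sketch below is a minimal Python illustration, assuming you track signup age, recent sessions, and last login per user; the `User` fields, segment names, and every threshold are hypothetical and should be tuned to your own product.

```python
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical per-user fields; use whatever your product tracks.
    days_since_signup: int
    sessions_last_30_days: int
    days_since_last_login: int


def segment(user: User) -> str:
    """Assign a user to exactly one segment.

    Thresholds are illustrative assumptions. Rules are checked in order,
    so a user in their first two weeks counts as "new" even if very active.
    """
    if user.days_since_signup <= 14:
        return "new"
    if user.days_since_last_login >= 21:
        return "churn_candidate"
    if user.sessions_last_30_days >= 20:
        return "power"
    return "general"


def is_targeted(user: User, target_segments: set[str]) -> bool:
    """True if this survey is aimed at the user's segment."""
    return segment(user) in target_segments


# A survey aimed only at new users skips an established power user.
print(is_targeted(User(60, 25, 1), {"new"}))  # False
```

Checking for churn risk before activity means a formerly heavy user who has gone quiet is treated as a churn candidate rather than a power user.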

6. Closing the loop

Collecting feedback is useless if users never see it lead to action. I make sure we show follow-ups like “Thanks! You told us onboarding felt long, so here’s what we’re improving.” It builds trust, encourages future participation, and shows the app is listening.

This is the mindset I use before launching any in-app survey. With objectives, timing, brevity, native design, audience targeting, and follow-up in place, you’re set up to collect quality input and not just noise.

7 In-app Survey Examples

Why use in-app surveys?

In-app surveys give you fast, contextual insights that analytics alone can’t. You learn what’s working, what’s confusing, and what’s missing, all while the user is still engaged.

Before we start, here’s how to read these examples. Each one follows a simple structure.

First, I’ll give you a short intro to the use case: when to use it, why it matters, and how it plays out in the real world. Then, I’ll break it down into four parts:

📍 Trigger: When the survey should appear.

🎯 Goal: What you want to learn from it.

💬 Example Question(s): A sample or two of what to ask in this particular survey.

✅ Why It Works: A quick insight into why this survey is effective.

So, without any further ado…

Example 1: In-App NPS Survey

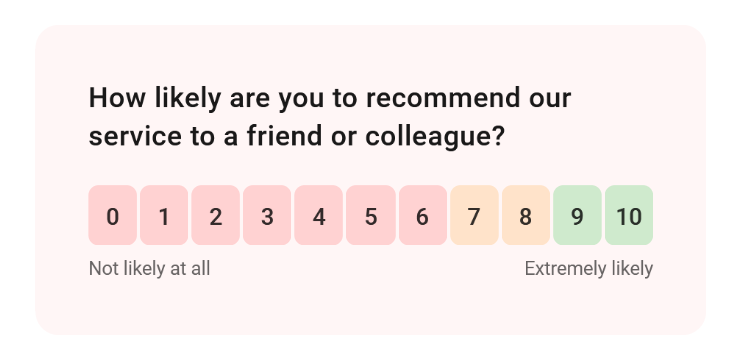

Definition: What is NPS?

Net Promoter Score is a simple survey that asks users how likely they are to recommend your product. Users answer on a scale from 0 to 10, and the results help track overall satisfaction and loyalty over time.

This is the most common type of in-app survey, and that’s for a good reason.

NPS (Net Promoter Score) is simple, fast, and widely understood. It helps you get a pulse on overall user sentiment and their loyalty without overcomplicating things.

You can use it early in the product lifecycle to establish a baseline, and later to track the impact of major changes. But NPS only works if it’s framed and timed well. Ask too early, and you get noise. Ask too late, and the user’s emotional connection to your product fades.

Here’s how I usually approach it:

📍 Trigger: After a user has been active for 7–10 days, or after they complete a key milestone (like onboarding).

🎯 Goal: Gauge overall satisfaction and loyalty. Establish a baseline for product sentiment and track changes over time.

💬 Example Question(s): NPS uses a simple question: “How likely are you to recommend this product to a friend or colleague?”

You can phrase it in different ways, for example by mentioning your product’s name. The key is that you’re gauging the person’s loyalty by their likelihood of recommending you to others.

Optional follow-up: “What’s the main reason for your score?”

✅ Why It Works: NPS is a familiar format and quick to answer. When timed right, it gives you a clean signal on how users feel about your product. It’s especially useful for spotting early signs of churn or measuring the impact of recent changes. Plus, once you segment scores (Promoters, Passives, Detractors), you can trigger targeted follow-ups that improve retention or collect testimonials.
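The segments mentioned above follow the standard NPS bands: 9–10 are Promoters, 7–8 are Passives, and 0–6 are Detractors. The score itself is the percentage of Promoters minus the percentage of Detractors, so it ranges from -100 to +100. Here’s a minimal Python sketch of that calculation plus a per-segment follow-up lookup; the Detractor question reuses the optional follow-up above, while the Promoter and Passive wording is purely illustrative.

```python
def nps_category(score: int) -> str:
    """Classify a single 0-10 answer using the standard NPS bands."""
    if not 0 <= score <= 10:
        raise ValueError(f"NPS answers must be 0-10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(scores: list[int]) -> float:
    """% promoters minus % detractors; ranges from -100 to +100."""
    if not scores:
        raise ValueError("need at least one response")
    categories = [nps_category(s) for s in scores]
    promoters = categories.count("promoter")
    detractors = categories.count("detractor")
    return 100 * (promoters - detractors) / len(scores)


# Follow-up question per segment (promoter/passive wording is illustrative).
FOLLOW_UP = {
    "promoter": "Would you be open to sharing a short testimonial?",
    "passive": "What would make this a 10 for you?",
    "detractor": "What's the main reason for your score?",
}

scores = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(net_promoter_score(scores))  # 20.0
print(FOLLOW_UP[nps_category(6)])  # What's the main reason for your score?
```

Passives count toward the total but not toward either side, which is why 5 Promoters and 3 Detractors out of 10 responses give a score of 20 rather than 50.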

Example 2: Onboarding Survey

You only get one chance to make a first impression. That’s why I always pay close attention to what happens during onboarding. It’s where users form their first opinion about your product. It’s also where most drop-offs happen.

A good onboarding survey can tell you where people get stuck, what’s unclear, and what expectations they had coming in. It helps you figure out if the value you think you’re delivering is actually landing.

I don’t just want to know if they finished the onboarding flow. I want to know how it felt. Was it confusing? Did they get what they needed? Was something missing? These are questions that analytics won’t answer on their own.

Here’s how I recommend you run an onboarding experience survey:

📍 Trigger: Right after the user completes onboarding or setup. Alternatively, a day later to give them a chance to reflect.

🎯 Goal: Understand friction points, unmet expectations, or missing guidance during onboarding.

💬 Example Question(s):

- “Was there anything confusing about the setup process?”

- “What did you expect to happen that didn’t?”

- “How clear were the next steps after signing up?”

- “Is there anything you wish we explained better during onboarding?”

✅ Why It Works: You’re catching users at a critical moment, while the experience is still fresh. The questions are specific enough to guide improvements but open enough to surface surprises. It also shows new users that you’re listening, which builds trust early.

Example 3: Feature-Specific Feedback Survey

This one’s a go-to anytime you ship something new. You’ve built a feature, you’ve shipped it, and now you need to know if it’s actually working for users.

The goal here isn’t just to find out if they like it. You’re trying to understand if the feature is clear, useful, and solves the problem it was meant to. Sometimes the answers are surprising. People might be confused by the UI. Or they might use it in a way you didn’t expect.

These surveys help you move fast without guessing. I’ve run them to validate features right after launch, or even months later when usage picks up. If you ask the right questions, they can tell you whether to keep iterating or move on.

Here’s how I set it up:

📍 Trigger: Right after the user engages with a new or updated feature for the first time.

🎯 Goal: Understand if the feature meets user expectations and provides value. Identify confusion or points of friction.

💬 Example Question(s):

- “Was this feature useful to you today?”

- “What were you trying to do with this feature?”

- “Did anything feel unclear or confusing?”

- “Is there anything you wish this feature did differently?”

✅ Why It Works: You’re asking for feedback while the experience is fresh. That’s when users can give the most specific input. Plus, targeting users who just used the feature means the responses are grounded in real usage, not vague impressions.
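The “first time” part of this trigger is easy to get wrong, since a survey that fires on every use quickly becomes annoying. Here’s a minimal Python sketch of first-use detection. It assumes an in-memory store for illustration (a real app would persist this per user), and the feature name is hypothetical.

```python
class FeatureSurveyTrigger:
    """Show a feature survey only on a user's first use of that feature.

    Uses an in-memory set for illustration; a real app would persist
    which features each user has already used.
    """

    def __init__(self, surveyed_features: set[str]) -> None:
        # New or updated features we want feedback on.
        self.surveyed_features = surveyed_features
        # (user_id, feature) pairs seen so far.
        self._seen: set[tuple[str, str]] = set()

    def on_feature_used(self, user_id: str, feature: str) -> bool:
        """Record a use and return True only if the survey should show now."""
        key = (user_id, feature)
        first_use = key not in self._seen
        self._seen.add(key)
        return first_use and feature in self.surveyed_features


# "bulk_export" is a hypothetical feature name.
trigger = FeatureSurveyTrigger({"bulk_export"})
print(trigger.on_feature_used("u1", "bulk_export"))  # True (first use)
print(trigger.on_feature_used("u1", "bulk_export"))  # False (already used)
print(trigger.on_feature_used("u2", "bulk_export"))  # True (different user)
```

Recording every use, not only uses of surveyed features, means a feature added to the surveyed set later won’t ask long-time users about a “first” use that happened months ago.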

Example 4: Time-to-First-Value Feedback Survey

Reaching first value is one of the most important moments in any product experience. It’s when the user first “gets it”: they do something meaningful and finally see what your product can do for them. Time-to-first-value measures how long it takes them to get there.

No wonder that moment is also called the “aha! moment.”

I care a lot about this moment, because users who never reach it tend to churn. And if they do reach it, it’s a great time to ask what clicked for them, or what almost didn’t.

This type of survey is all about understanding whether your product delivered on the promise that brought them in. You’re trying to capture the story right when it turns positive — or catch it just before it turns negative.

Here’s how I usually approach it:

📍 Trigger: After the user completes a key action that reflects first value — like publishing something, creating a project, or integrating with a third-party tool.

🎯 Goal: Understand if the user reached value, what that value looked like to them, and whether they had any blockers getting there.

💬 Example Question(s):

- “What made you realize the product was useful?”

- “What were you hoping to accomplish today?”

- “Did anything slow you down or make the process harder than expected?”

- “What almost stopped you from getting value today?”

✅ Why It Works: This survey captures the turning point in the user journey. When timed right, it gives you a snapshot of what real value looks like in their words — and what friction nearly got in the way. These insights are gold for refining activation and messaging.
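Since this survey fires at the moment of first value, it can help to record how long the user took to get there, so answers can be read next to the time-to-first-value metric. A minimal Python sketch, assuming you log timestamped events per user; the names in `FIRST_VALUE_EVENTS` are hypothetical stand-ins for the key actions listed in the trigger above.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical events that count as "first value" in this product.
FIRST_VALUE_EVENTS = {"project_created", "content_published", "integration_connected"}


def time_to_first_value(
    signed_up_at: datetime, events: list[tuple[datetime, str]]
) -> Optional[timedelta]:
    """Time from signup to the earliest first-value event, or None if not reached."""
    value_times = [ts for ts, name in events if name in FIRST_VALUE_EVENTS]
    if not value_times:
        return None
    return min(value_times) - signed_up_at


signup = datetime(2024, 5, 1, 9, 0)
events = [
    (datetime(2024, 5, 1, 9, 5), "page_viewed"),
    (datetime(2024, 5, 2, 14, 30), "project_created"),
    (datetime(2024, 5, 3, 10, 0), "content_published"),
]
print(time_to_first_value(signup, events))  # 1 day, 5:30:00
```

A `None` result marks users who haven’t reached first value yet, which is exactly the group this survey should not be shown to.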

Example 5: Customer Satisfaction (CSAT) Survey

If I need a quick read on how a specific interaction went, I use a CSAT survey. It’s short, simple, and focused. And it’s one of the fastest ways to catch whether a user experience worked or fell flat.

I don’t just mean support interactions. CSAT also works after completing a task in-app, finishing a workflow, or hitting a mini milestone. It tells me if the user walked away satisfied — and if not, why.

This isn’t a deep diagnostic tool. But that’s the point. It helps me keep a pulse on satisfaction in high-traffic areas of the product. If I see low scores in a certain flow, I know where to dig deeper.

Here’s how I typically run it:

📍 Trigger: Immediately after the user completes a task, finishes a flow, or interacts with support.

🎯 Goal: Measure satisfaction with a specific experience and identify small friction points or gaps.

💬 Example Question(s):

- “How satisfied are you with your experience today?”

- “Did this solve your problem?”

- “How smooth was the process you just completed?”

- “What could we have done to make that easier or better?”

✅ Why It Works: CSAT surveys are fast to answer and easy to interpret. They help you flag issues early without asking users to do much. Great for regular check-ins across the product without slowing things down.
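To spot the flows worth digging into, you can compute CSAT per flow. A common convention, assumed here, is to ask for a 1–5 rating and report the percentage of 4s and 5s. In this minimal Python sketch, the flow names and the 70% cutoff are illustrative assumptions, not benchmarks.

```python
from collections import defaultdict


def csat_by_flow(responses: list[tuple[str, int]]) -> dict[str, float]:
    """CSAT per flow: % of 1-5 ratings that are 4 or 5."""
    totals: dict[str, int] = defaultdict(int)
    satisfied: dict[str, int] = defaultdict(int)
    for flow, rating in responses:
        if not 1 <= rating <= 5:
            raise ValueError(f"CSAT ratings must be 1-5, got {rating}")
        totals[flow] += 1
        if rating >= 4:
            satisfied[flow] += 1
    return {flow: 100 * satisfied[flow] / totals[flow] for flow in totals}


def flows_to_investigate(scores: dict[str, float], threshold: float = 70.0) -> list[str]:
    """Flows scoring below the (illustrative) threshold, lowest first."""
    low = [flow for flow, score in scores.items() if score < threshold]
    return sorted(low, key=lambda flow: scores[flow])


# Hypothetical (flow, rating) responses.
responses = [
    ("checkout", 5), ("checkout", 4), ("checkout", 2),
    ("export", 5), ("export", 5),
    ("invite", 3), ("invite", 2), ("invite", 4),
]
scores = csat_by_flow(responses)
print(flows_to_investigate(scores))  # ['invite', 'checkout']
```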

Example 6: Exit or Cancellation Survey

This one hurts — but it’s also one of the most important surveys you can run.

When someone cancels or downgrades, they’re telling you the product didn’t meet their needs. You don’t always get a second chance, so this is your moment to learn what pushed them to leave.

I use exit surveys to spot patterns. Are people leaving because of pricing? Missing features? Lack of value? Bad onboarding? One response won’t tell you much. But after a dozen, trends start to appear. That’s when you can act.

I keep these surveys short. People are already halfway out the door, so I don’t ask for a lot. Just enough to understand their reasoning and maybe open the door to win them back later.

Here’s how I handle it:

📍 Trigger: When the user cancels a subscription or downgrades to a lower plan.

🎯 Goal: Understand the reasons behind churn so you can address them and improve retention.

💬 Example Question(s):

- “What’s the main reason you decided to cancel?”

- “Was there something missing or frustrating about the product?”

- “Did we meet the expectations you had when signing up?”

- “What would have convinced you to stay?”

✅ Why It Works: You’re getting feedback at the moment of truth. It’s honest, often blunt, and incredibly valuable. Even if you don’t win the user back, you walk away with insights that help you keep the next one.
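The “after a dozen, trends start to appear” idea translates directly into a simple tally. This minimal Python sketch holds off on reporting until there are at least 12 responses, mirroring the dozen above as a rule of thumb rather than a statistical threshold. The reason labels are hypothetical categories you’d map open-ended answers into.

```python
from collections import Counter


def churn_reason_trends(reasons: list[str], min_responses: int = 12) -> list[tuple[str, float]]:
    """Share of cancellations per reason (%), most common first.

    Returns an empty list until at least `min_responses` answers exist,
    because a handful of responses rarely reveals a real pattern.
    """
    if len(reasons) < min_responses:
        return []
    counts = Counter(reasons)
    total = len(reasons)
    return [(reason, 100 * n / total) for reason, n in counts.most_common()]


# Hypothetical labels mapped from answers to
# "What's the main reason you decided to cancel?"
reasons = (
    ["pricing"] * 5
    + ["missing_feature"] * 4
    + ["lack_of_value"] * 2
    + ["onboarding"] * 1
)
for reason, share in churn_reason_trends(reasons):
    print(f"{reason}: {share:.0f}%")
```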

Example 7: Customer Effort Score (CES) Survey

Definition: What is CES?

Customer Effort Score measures how easy it was for someone to complete a task. Lower effort = better experience. High effort is often a warning sign of churn.

Some surveys measure satisfaction. CES measures strain. It asks how easy it was for someone to do something — and if it felt harder than it should have.

I use CES when I want to improve usability or spot hidden friction. A task might look smooth in analytics, but if users are telling me it felt clunky, I dig in. This survey is great after workflows, feature usage, or any action that should feel seamless.

It also gives you early warning signs. If something feels like a chore, people will avoid it — or worse, they’ll leave.

Here’s how I like to run it:

📍 Trigger: After a user completes a core task or flow that matters to product value or adoption.

🎯 Goal: Measure how much effort the user felt they had to invest to complete the action.

💬 Example Question(s):

- “How easy was it to complete this task today?”

- “Did anything make this more difficult than expected?”

- “What part of the process felt the most frustrating?”

- “Is there anything we could simplify or remove next time?”

✅ Why It Works: Effort is a leading indicator of churn. CES surfaces UX issues that might not show up in satisfaction scores. When something feels hard, people remember — and avoid it. This helps you fix that before it costs you users.
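CES scales vary between tools. This minimal Python sketch assumes a 1–7 answer to “How easy was it to complete this task today?”, where 7 means very easy, so a higher score means lower effort. Treating answers of 3 or below as “high effort” is also an assumption.

```python
from statistics import mean


def ces_summary(ratings: list[int]) -> dict[str, float]:
    """Summarize CES answers on an assumed 1-7 scale (7 = very easy).

    "average": mean score (higher = less effort).
    "pct_high_effort": % of answers at 3 or below (assumed cutoff).
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("CES ratings must be 1-7")
    high_effort = sum(1 for r in ratings if r <= 3)
    return {
        "average": float(mean(ratings)),
        "pct_high_effort": 100 * high_effort / len(ratings),
    }


print(ces_summary([7, 6, 6, 2, 5, 3, 7, 4]))
# {'average': 5.0, 'pct_high_effort': 25.0}
```

Tracking the high-effort share alongside the average helps because a decent average can hide a sizable group of users who found the task hard.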

Tips for Implementing In-App Surveys

Running a good in-app survey is not just about writing questions. It’s about making the whole experience feel relevant, respectful, and useful — for both sides.

Here’s what I keep in mind every time I build one:

- Keep it short: 1–2 questions max. People don’t want a quiz. Keep it tight and to the point.

- Make it timely: Trigger the survey right after the relevant action. Don’t wait too long, or you’ll lose context.

- Use simple language: Avoid jargon. Ask questions the way a human would.

- Match the look and feel: Design it to feel like part of the product. If it looks out of place, it feels like a pop-up ad.

- Target carefully: Don’t blast the same survey to everyone. Send the right question to the right person at the right time.

- Follow up: Close the loop when possible. Let users know their feedback was heard. Even a simple “thanks, we’re working on it” goes a long way.

In-app surveys aren’t just data collection tools. They’re part of your UX. Treat them like any other product experience — with intention, clarity, and respect.
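Some of the tips above can be checked mechanically before a survey goes live; plain language and visual fit still need a human eye. Here’s a minimal Python sketch of such a pre-launch check, using a hypothetical `SurveyConfig` shape rather than any specific tool’s format.

```python
from dataclasses import dataclass, field


@dataclass
class SurveyConfig:
    """Hypothetical survey definition; field names are illustrative."""
    name: str
    questions: list[str]
    trigger_event: str = ""
    target_segments: list[str] = field(default_factory=list)
    thank_you_message: str = ""


def check_survey(config: SurveyConfig) -> list[str]:
    """Return problems found against the checkable tips (empty = OK)."""
    problems = []
    if not 1 <= len(config.questions) <= 2:
        problems.append("keep it short: use 1-2 questions")
    if not config.trigger_event:
        problems.append("make it timely: set a trigger event")
    if not config.target_segments:
        problems.append("target carefully: choose at least one segment")
    if not config.thank_you_message:
        problems.append("follow up: add a thank-you message")
    return problems


draft = SurveyConfig(name="feature_feedback", questions=["Was this feature useful to you today?"])
for problem in check_survey(draft):
    print(problem)
```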

Best tool for running in-app surveys: Refiner

Refiner is an in-app survey tool for web and mobile apps, built specifically for SaaS and digital products that want high-quality, contextual feedback from inside the product, not from random email blasts.

With Refiner, product and CX teams can run targeted microsurveys in their web apps or native mobile apps and collect feedback that helps them make better product decisions and improve customer experience based on what their real users are doing.

The whole idea is simple. You drop Refiner into your app, show beautifully branded surveys at the perfect moment, and get highly valuable insights instead of noisy, low-intent feedback. Refiner focuses on perfectly timed in-product microsurveys and gives you advanced targeting so you can always ask the right question, to the right segment, at the right time.

What you can do with Refiner

- Run in-app surveys in your web app and native mobile apps with SDKs for JavaScript, iOS, Android, React Native, Flutter and more.

- Launch multi-channel surveys in-app, by email, or through hosted survey links for users who are not currently in your product.

- Use templates and 12 question types for NPS, CSAT, CES, PMF and other customer experience and product surveys.

- Customize design with comprehensive styling options and custom CSS so surveys match your product’s UI.

- Target users by traits, behaviour, device, country, language or previous responses with a segmentation engine and trigger surveys on specific events.

- Track NPS and other CX metrics over time with recurring surveys and real-time reporting dashboards, including AI-powered response tagging.

If you are running a SaaS or mobile app and want serious, in-app survey infrastructure for product feedback and customer experience, Refiner might just be the solution you’re after.

In-app Surveys FAQ

What is a good example of an in-app survey?

A simple NPS survey triggered after a user completes onboarding is a great example. It helps gauge sentiment right when the user has had a meaningful interaction with your product.

When should you trigger in-app surveys?

Trigger them after meaningful moments: onboarding completion, feature usage, task completion, or cancellation. Timing is everything if you want honest, actionable feedback.

How many questions should an in-app survey have?

One or two. That’s it. Long surveys rarely get answered. Keep it short, focused, and easy to complete.

What are the most common types of in-app surveys?

NPS, CSAT, CES, onboarding feedback, feature-specific surveys, and exit surveys. Each one serves a different purpose, depending on where the user is in their journey.

What makes an in-app survey effective?

Right audience. Right time. Right question. Plus, a native look and a clear follow-up process. That’s the formula I use every time.