A/B Testing for In-App Surveys

Introduction

A/B testing is a method to compare two (or more) versions of a survey to see which performs better. For surveys, “better” usually means achieving a higher response rate.

While Refiner doesn’t yet offer a full A/B testing suite, you can still set up a simple A/B test for Web and Mobile App surveys with just a few steps.

Create a variation

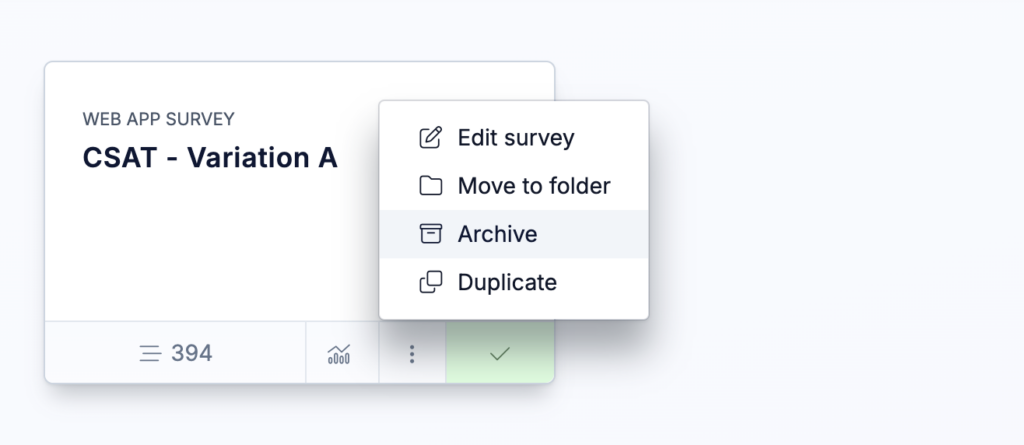

Start by duplicating your existing survey. Open the original survey, click on the three-dots menu, and select the duplication option.

Rename the original survey “Variation A” and the duplicate “Variation B.” The duplicate is created in draft mode; keep it in draft until you have finished making your changes.

Make changes to the variation

Next, edit the duplicate survey. This is where you introduce the variation you want to test. You might change the wording of a question, add or remove a question, or adjust the design of the survey.

Keep the changes small and focused so you can clearly identify which adjustment affects the results. If you change too many elements at once, it becomes difficult to know what caused an improvement in response rates.

Keep the Target Audience and trigger event settings identical to the original survey for now. You will adjust the audience in the next step.

Exclude variations from Target Audience

When two or more surveys are eligible to be shown to a user, Refiner randomly selects one of them. This randomization is what makes the A/B test possible.

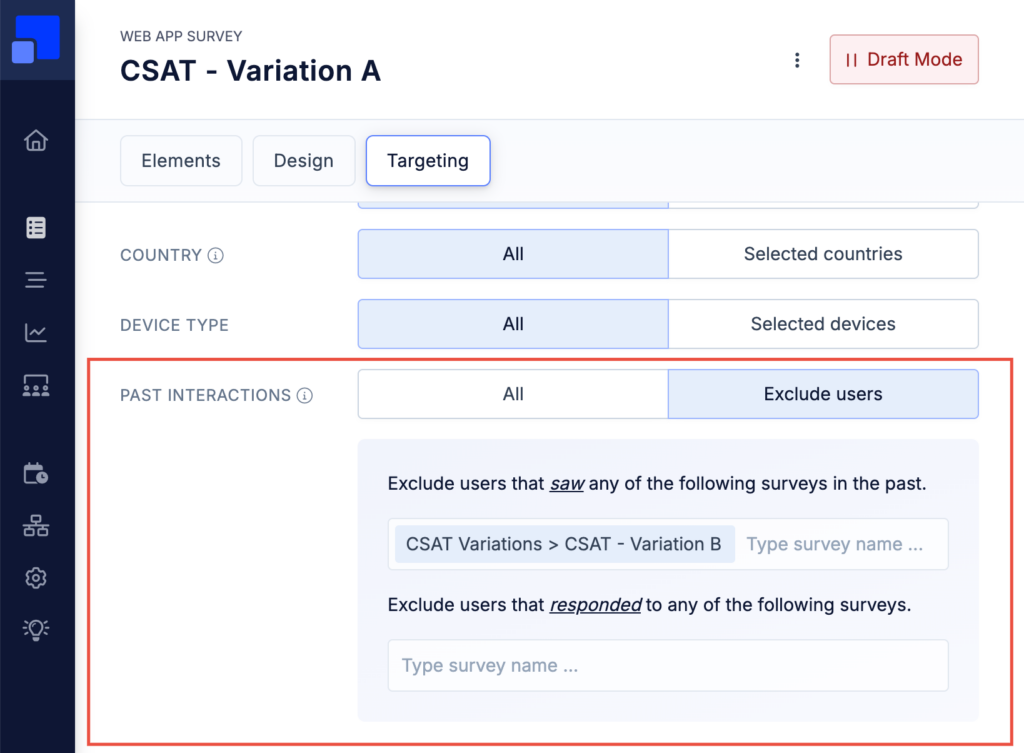

However, without some adjustments, a user could see both versions at different times. To prevent this, exclude users who have already seen Variation A from Variation B, and users who have already seen Variation B from Variation A.

You do this with the “Past Interactions” filter: activate the option and select the other survey variation in the “Exclude users that saw a survey” section. Do this for both survey variations.
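
To see why the exclusion matters, here is a minimal Python sketch that models the behaviour described above. It is a hypothetical simulation, not Refiner's code. It assumes that each trigger event shows at most one survey, that a user is shown a given survey at most once, and that the exclusion makes a user who saw one variation ineligible for the other. The names `SURVEYS`, `EXCLUDES`, `eligible_surveys`, and `simulate` are illustrative only.

```python
import random
from collections import Counter

# Hypothetical model of the setup described above, not Refiner's actual code.
# Assumptions: each trigger event shows at most one survey, a user is shown a
# given survey at most once, and the "Past Interactions" exclusion makes a user
# who saw one variation ineligible for the other.
SURVEYS = ("Variation A", "Variation B")
EXCLUDES = {"Variation A": "Variation B", "Variation B": "Variation A"}


def eligible_surveys(seen: set[str], use_exclusion: bool) -> list[str]:
    """Return the variations this user can still be shown."""
    return [
        s
        for s in SURVEYS
        if s not in seen and not (use_exclusion and EXCLUDES[s] in seen)
    ]


def simulate(num_users: int, events_per_user: int,
             use_exclusion: bool, seed: int = 42) -> dict[str, set[str]]:
    """Return, for each simulated user, the set of variations they saw."""
    rng = random.Random(seed)
    seen_by_user: dict[str, set[str]] = {}
    for i in range(num_users):
        seen: set[str] = set()
        for _ in range(events_per_user):
            candidates = eligible_surveys(seen, use_exclusion)
            if candidates:
                # Pick one of the eligible surveys at random.
                seen.add(rng.choice(candidates))
        seen_by_user[f"user-{i}"] = seen
    return seen_by_user


if __name__ == "__main__":
    for use_exclusion in (True, False):
        results = simulate(1000, events_per_user=3, use_exclusion=use_exclusion)
        saw_both = sum(len(seen) == 2 for seen in results.values())
        print(f"exclusion={use_exclusion}: {saw_both} users saw both variations")
    # With the exclusion on, each user belongs to exactly one group.
    groups = Counter(
        next(iter(seen)) for seen in simulate(1000, 3, True).values()
    )
    print(groups)
```

With the exclusion on, every simulated user lands in exactly one group, split roughly evenly by the random pick. With it off, any user who triggers the survey twice ends up seeing both variations, which would mix the two groups and blur the comparison.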

Let the test run

Once both variations are ready, publish them. Over time, both surveys will start collecting responses. You can check the user details panel to confirm that each user sees only one of the two versions.

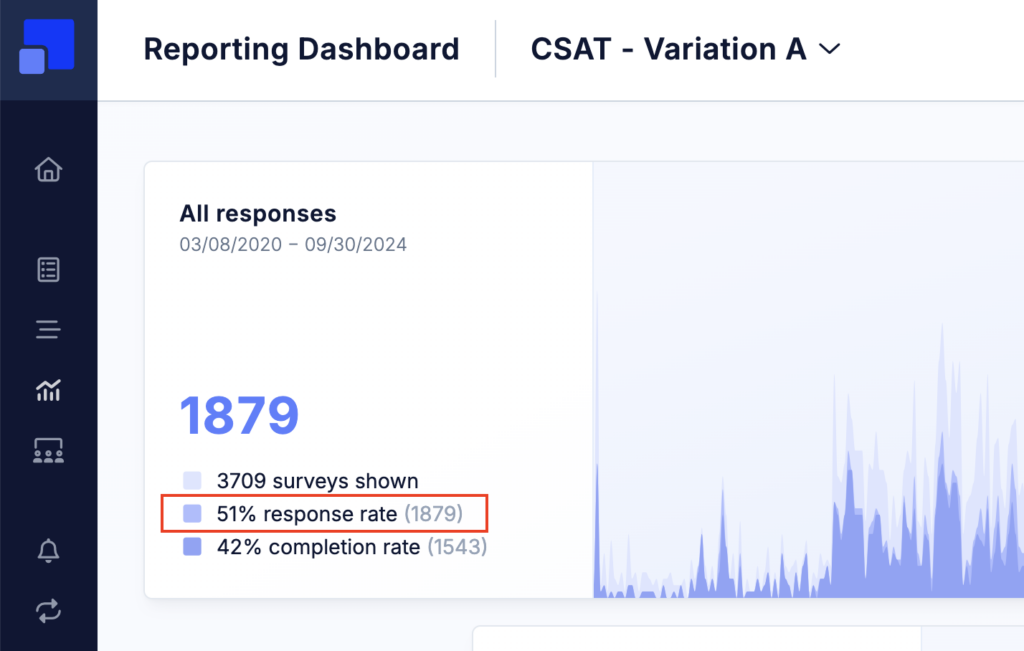

Once both variations have been shown a couple of hundred times (we recommend at least 1,000 survey views), you can start comparing the results by creating an ad-hoc dashboard that includes both surveys. If one variation has a significantly higher response rate, you can declare it the winner of your test.
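
To decide whether a difference in response rates is significant rather than random noise, one common approach is a two-sided, two-proportion z-test on each variation's responses and views. This is a standard statistical technique, not a Refiner feature. The sketch below uses only Python's standard library. The function name and the example numbers are hypothetical, and the test assumes each user saw only one variation, as set up above.

```python
import math


def two_proportion_z_test(responses_a: int, views_a: int,
                          responses_b: int, views_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test comparing the response rates of two variations.

    Returns (z, p_value). A positive z means Variation B has the higher rate.
    Assumes each user saw only one variation and views are independent.
    """
    rate_a = responses_a / views_a
    rate_b = responses_b / views_b
    # Pooled rate under the null hypothesis that both variations perform the same.
    pooled = (responses_a + responses_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("Test is undefined when all views or no views got a response")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers, not real Refiner data.
    z, p = two_proportion_z_test(responses_a=120, views_a=1000,
                                 responses_b=160, views_b=1000)
    print(f"A: {120 / 1000:.1%}  B: {160 / 1000:.1%}  z = {z:.2f}  p = {p:.4f}")
    if p < 0.05:
        print("Difference is significant at the 5% level")
    else:
        print("Not enough evidence yet; keep the test running")
```

With these made-up numbers (12% vs. 16% on 1,000 views each), z is about 2.6 and the p-value is well below 0.05. If the p-value stays above your threshold, the difference may be noise, so let the test collect more views before picking a winner. The pooled z-test is a reasonable approximation at these sample sizes. For very small counts, an exact test such as Fisher's is more appropriate.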